December 12, 2016 / by / In deeplearning

NIPS 2016

tl;dr: AI is taking over the world, except maybe in healthcare.

Pointers to slides from some of the talks are available at the end of post. If you have some to add, shoot me a message or leave a comment and I’ll try to update the list.

The NIPS Experience

This was my first year at NIPS and there was a lot to see and do. Each day was pretty jam-packed with content so I’m sure that I’ve already forgotten some of what happened. This is my attempt at a summary, incompletely remembered and partially explained.

My first impression is that it’s getting pretty difficult to call NIPS an “academic” conference in the traditional sense, as there is a heavy industrial presence from the likes of Google (both Brain and DeepMind), Facebook, Microsoft, Amazon, etc. In my unofficial and totally non-scientific straw poll of attendees, it appeared that the number of badges sporting industrial affiliations outstripped those with academic ones by a pretty hefty margin. Of course, this transformation has been happening for a while a while, but I was still struck by the scope of industry’s presence at NIPS this year.

The second impression is that NIPS has become the venue for big releases and announcements from tech companies working on ML. Some notable announcements that coincided with NIPS include:

- OpenAI released their Universe platform in a blog post on the opening day of NIPS. You can think of this as the ultimate sandbox for developing a reinforcement learning agent. Universe gives you programmatic access to hundreds of video games (Portal, GTA V, etc) and sends you the raw pixels, which your RL agent can use to assess the current state of the world and send back commands to be executed in the video game environment. Ilya Sutskever gave a nice overview of Universe during his talk at the RNN symposium on Thursday. He discussed the potential for ‘meta-algorithms’ where you drop the agent into a random game, have it learn for a few episodes, and then drop it into a new game and repeat this process over and over. Hopefully the agent will start to learn skills and tactics that generalize across different environments the way humans do. Universe makes this kind of experimentation easier than it’s ever been before and I’m sure we’ll be seeing a lot of really cool papers that use Universe in the next few years.

- DeepMind open-sourced their platform for experimenting with RL agents on the same day. So much for having the “we’re open-sourcing our reinforcement learning platform” news cycle to yourself. DeepMind lab provides more 3D games for your RL agent to learn from.

- Uber announced they had acqui-hired Geometric Intelligence in order to expedite their own AI efforts (this is in addition to CMU’s robotics department they previously poached).

- Apple announced that it was joining the rest of the community and would begin to publish it’s research and make source-code available. They recently hired Russ Salakhutdinov to run their AI lab, and I’m sure he convinced Apple that getting the right people would be tough if they weren’t allowed to publish. In their recruiting flyer they explicitly say that you will be allowed to publish your research as a member of the Apple team. It will be interesting to see if this new found openness creeps into any other part of their traditionally secretive corporate culture.

I guess my third (and final numbered) impression of NIPS is that there’s still a feeding frenzy for recruiting talented people with deep learning and ML chops. In addition to the usual ML suspects like Google and Facebook, there were automotive, financial, and e-commerce companies all looking to grow their internal ML groups. It’s hard to imagine that there has been a better time to be a ML researcher who is on the job market. You will probably have a lot of options, and therefore a lot of leverage.

Ben Hamner summarized the recruiting environment at NIPS like this:

#nips2016: machine learning research conference or massive head-hunting free-for-all? You decide.

— Ben Hamner (@benhamner) December 6, 2016

It’s not uncommon to see startup founders and CEOs of AI companies walking around and intermingling with attendees at NIPS. Jack Clark overheard someone say that the feeling at NIPS is starting to feel a lot like another retreat that has become a favorite of the tech elite:

OH at lunch at OpenAI: "NIPS is becoming like Burning Man"

— Jack Clark (@jackclarkSF) December 7, 2016

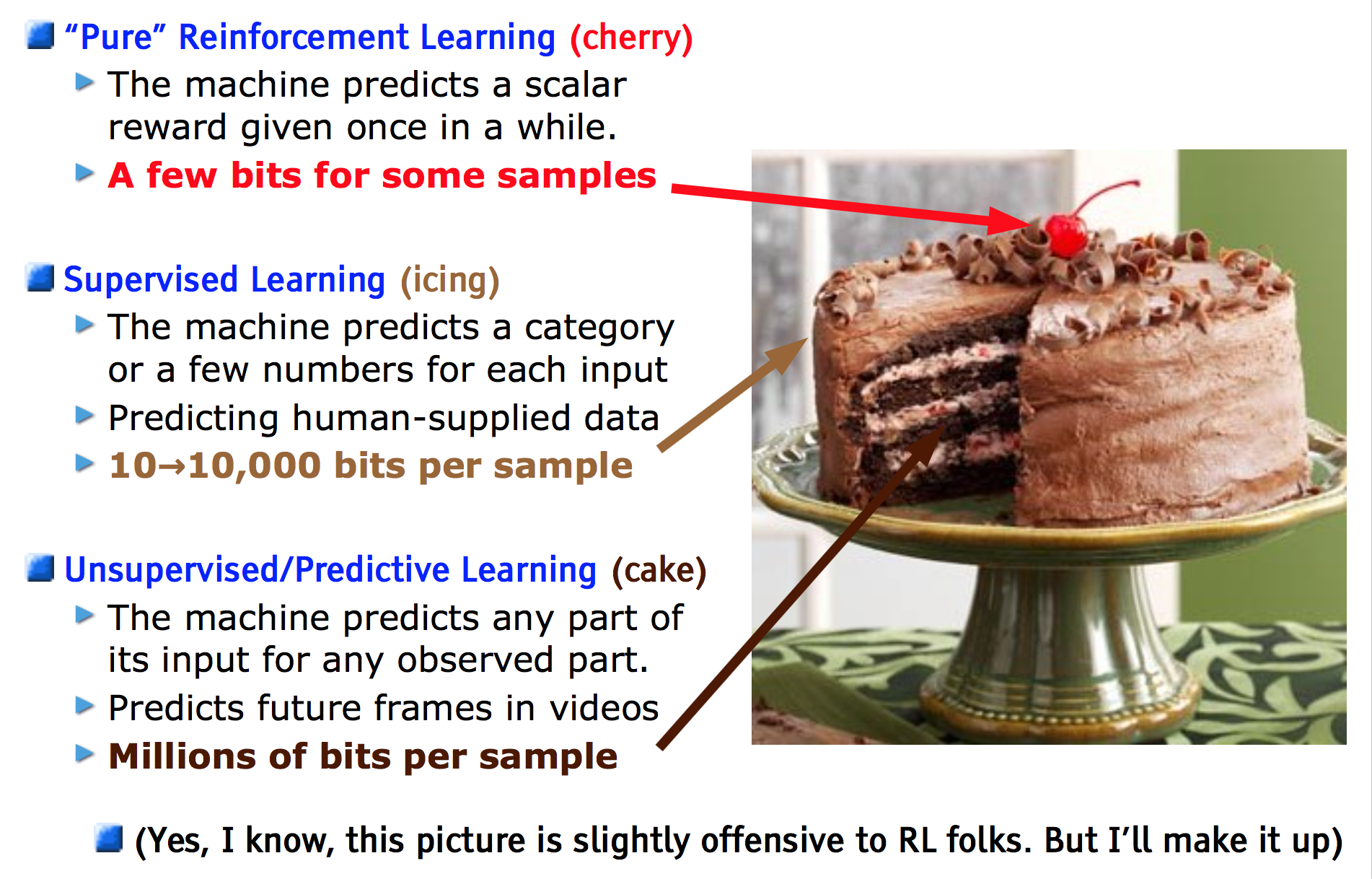

LeCun’s Cake

Yann LeCun kicked off the main conference with his opening address entitled “Predictive Learning”. It was a mostly high-level talk where he argued that we all really need to be thinking about unsupervised learning a lot more than we currently do. To make this concrete, he used a picture of a cake and suggested that things like supervised and reinforcement learning are the icing and the cherry, but that unsupervised learning was the cake itself. After this talk, LeCun’s “cake” became a meme and started popping up randomly in other NIPS presentations throughout the week. For reference here is the slide in question:

His main point being that focusing too much on supervised and reinforcement learning could be missing the most important part (i.e. the cake) of what is needed for AI, which in Yann’s opinion, is unsupervised learning. The two phrases I often hear in support of this view are “most data is unlabeled” (so we need unsupervised learning to use all this data) and “humans mostly do unsupervised learning”, so we really need to start thinking long and hard about what exactly we mean by “unsupervised” if we want this AI progress party to keep going. Yann proposes a new view of unsupervised learning which he has termed “predictive learning”, that he describes as:

Predicting any part of the past, present or future precepts from whatever information is available

which sounds a little underspecified, at least to me. He gives several instances of predictive learning, such as predicting one half of an image from the other half, and all of the fun work going on with GANs. I think I prefer the term “self-supervised” to describe stuff like this, and honestly, I’m not a huge fan of the term “predictive learning”. It sounds at first glance like a different way to say supervised learning, and so could be a source of confusion. It also it skates a little too closely to Jeff Hawkins’ description of hierarchical temporal memory (and all of the baggage that entails). But Yann is the luminary and I am not, so “predictive learning” may be the way to go.

I’m sure you can expect to see lots of pictures of LeCun’s cake being fed into deep nets as a stand-in for the unsupervised portion of a model in talks in the near future. And yes, since unsupervised learning is hard and is bound to fail in some instances, it’s also not hard to predict where this meme is headed.

Schmidhuber’s Gonna Schmidhuber

An interesting thing happened during Ian Goodfellow’s tutorial on Generative Adversarial Networks. While Ian was speaking Jurgen Schmidhuber went full Kanye, and interrupted Ian’s talk by going up to the mic and talking over him. Stephen Merity tweeted this in response:

Schmidhuber interrupted the GAN tutorial, stealing time from those listening & learning. I don't care who you are, don't do that.#nips2016 pic.twitter.com/NqCHkAynCm

— Smerity (@Smerity) December 5, 2016

and I’m sure that most would agree with Stephen’s sentiment. Schmidhuber has something of a long standing beef with the so-called “Deep Learning Mafia” of Hinton, LeCun, and Bengio. As is this case in reinforcement learning, credit assignment is difficult when establishing methodological precedence and Schmidhuber feels that he has not been given his due for some of the foundational work that has catalyzed our current deep learning renaissance. He may have a point, but surely there are better ways to handle this than interrupting someone during a tutorial (which is meant to help others learn).

Machine Learning in Healthcare

I’m going to spend the rest of the post talking about the experience at the Machine Learning for Health workshop since this is my own area of research focus. I thought the workshop was great and served as a nice coda to an energizing week in Barcelona. However, I think that the tone was markedly different from the rest of NIPS, or at least from the other sessions and symposia that I attended. While there was definitely a lot of enthusiasm, I think there is also this creeping sense that we are much further behind other areas of ML and that we have some pretty stark challenges ahead of us that must be solved.

Leo Celi (project lead on MIMIC) gave a great opening talk and did a wonderful job calibrating expectations for what is currently possible with ML in healthcare. He gave an overview of some of the problems with healthcare data and how these issues will impose limitations on what we can do with machine learning. This discussion led naturally into a larger one on the problem with most scientific data and the reproducibility crisis. Of course John Ioannidis’s seminal paper “Why Most Published Research Findings Are False” made an appearance, and served as a useful reminder that science and reasoning under uncertainty are hard, despite what the rest of NIPS might be telling us.

Sendhil Mullainathan took a look at the challenges in healthcare through the lens of an economist. Sendhil thinks, as most economists do, in terms of counterfactuals and causal inference. He spent the first part of his talk documenting his transition from inferential/causal statistics to predictive modeling, that was in many ways reminiscent of Breiman’s famous “Two Cultures” paper. His main point is that a lot of pieces we need for both causal inference and predictive modeling are often missing or pathologically incomplete in healthcare data. We either need new methods that account for this or we need to go collect better data to keep moving forward.

Neil Lawrence gave an amazing keynote on what I will call the “epistemic foundations of mathematical healthcare data science”, for definite want of a better term. Neil was a very engaging speaker who has an equally engaging blog that you should definitely check out if you haven’t before.

There was also a host of great methodological talks and posters during the workshop. Medical imaging is seeing a lot of success from deep learning and there was a conserved theme of using recurrent neural networks to model longitudinal data from the EHR. However, most of my thoughts stayed with the challenges outlined by the invited speakers.

If I were going to synthesize the points the speakers made and try to put them into some common framework, it would go something like this. Pretend you have access to an EHR and you want build a predictive model to help guide clinical decision making or help diagnose a particularly challenging clinical case. A patient is observed to be in some clinical state () via the EHR at time and the goal is to predict the next state () for that patient. Now, we tend to think of this patient’s state as being drawn from some distribution that is determined by the underlying physiology and disease process, so , where is some stochastic process that governs how the disease process evolves. The hope is that by using the EHR, we can get a lot of observations of (in the form of diagnostic history, medications, lab values, etc) that will let us infer how this process will change over time and let us make good predictions about what states the patient is likely to visit in the future.

The problem is that in reality we don’t actually observe draws from the disease process directly, but instead we observe a related quantity , where:

where is the stochastic process governing how the patient should be managed at an institutional level and is another stochastic process governing how the physician reacts to and cares for the patient, over various time horizons. So what we observe in is some unknown function that combines the disease process, the hospital/healthcare environment, and the physician who saw the patient.

One of the cleanest examples of this that I know is from work done by my PI Zak Kohane and Griffin Weber where they show that the time a lab is taken can be more informative that the actual lab value. For instance, someone with a normal white blood cell (WBC) count that was taken at 3am may have a lower chance of survival than someone who has an abnormal WBC taken at 9am. Why? Well, if the doctor ordered a WBC at 3am, it’s probably because the patient wasn’t doing very well and they needed more information to figure what exactly was going on, whereas a WBC taken at 9am is probably part of routine testing, so even if they have a low value it may less predictive of survival. Griffin terms this healthcare dynamics, which I think is a fantastic conceptual framework for thinking about this process.

Why does this matter? Unless we can safely marginalize over the things we don’t care about (perhaps and , in this case), then when we port our algorithm to new healthcare systems or have new physicians, the algorithm will probably break because the underlying distributions have changed. Even worse, if physicians start using our algorithm in practice to guide their decision making, then we could get into some type of observer effect situation, since the physicians are using the predictions of the algorithm to change their process, and thus change the underlying distribution.

All of this is enough to give anyone a pretty bad case of the howling fantods, but the task is not hopeless. It just means that we need to really think carefully about the data generating process when we work with healthcare data, or we might be mislead. If you’ve been working with healthcare data for a while, I bet none of this will be surprising to you.

There are a lot of exciting things that are starting to happen around healthcare, ML, and technology but this workshop helped remind me that there is still a lot of work to do. David Shaywitz recently wrote an article summarizing the year in Health Tech, and he opens with a nice, succinct summary of the state of affairs:

Among the key health technology lessons that 2016 has reinforced: biology is fiendishly complex; data ≠ insight; impacting health–whether making new drugs or meaningfully changing behavior–is hard; and palpable progress, experienced by patients, is starting to occur (and is tremendously exciting).

Couldn’t have said it better myself.

Summary

NIPS was fantastic, I learned a lot and had a great time. More importantly, it got me thinking critically about my own research, which is the what a good conference is supposed to do, right?

To wrap things up, going to the main conference talks left me feeling like:

whereas the healthcare workshop (which I must reiterate, was great!) had me feeling like:

Links to Slides and Videos

There are a ton of videos available through the main conference site, and I am not going to recreate that list. Here are a list to slides that may not be centrally listed or available, discovered mainly on twitter.

- Peter Abbeel, “Tutorial: Deep Reinforcement Learning through Policy Optimization” - slides

- Yoshua Bengio, “Towards a Biologically Plausible Model of Deep Learning” - slides

- Mathieu Blondel, “Higher-order Factorization Machines” - slides

- Kyle Cramer (keynote), “Machine Learning & Likelihood Free Inference in Particle Physics” - slides

- Xavier Giro, “Hierarchical Object Detection with Deep Reinforcement Learning” - slides

- Ian Goodfellow, “Adversarial Approaches to Bayesian Learning and Bayesian Approaches to Adversarial Robustness” - slides

- Ian Goodfellow, “Tutorial: Introduction to Generative Adversarial Networks” - slides

- Neil Lawrence, “Personalized Health: Challenges in Data Science” - slides

- Yann LeCun, “Energy-Based GANs & other Adversarial things” - slides

- Yann LeCun (keynote), “Predictive Learning” - slides

- Valerio Maggio, “Deep Learning for Rain and Lightning Nowcasting” - slides

- Sara Magliacane, “Joint causal inference on observational and experimental data” - slides

- Andrew Ng, “Nuts and Bolts of Building Applications using Deep Learning” - slides

- John Schulman, “The Nuts and Bolts of Deep RL Research” - slides

- Dustin Tran, “Tutorial: Variational Inference: Foundations and Modern Methods” - slides

- Jenn Wortman Vaughan, “Crowdsourcing: Beyond Label Generation” - slides

- Reza Zedah, “FusionNet: 3D Object Classification Using Multiple Data Representations” - slides

NIPS 2016 Blogroll

- Igor Carron of Nuit Blanche: Some general takeaways from #NIPS2016

- Sergey Korolev: NIPS 2016 experience and highlights

- Neil Lawrence of Inverse Probability: Post NIPS Reflections

- Paul Mineiro of Machined Learnings: NIPS 2016 Reflections